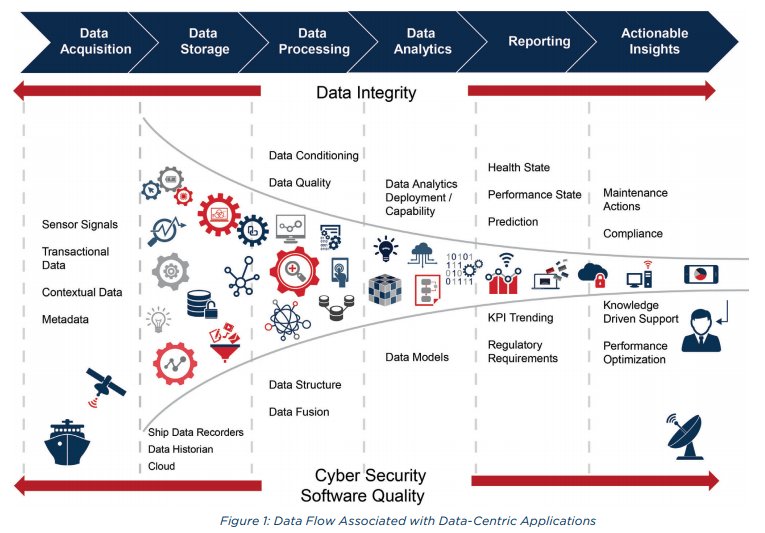

Modern marine vessels and offshore units are equipped with functions for structural and machinery health monitoring, efficiency monitoring, and operational performance management and optimization. Sensors and onboard instrumentation collect the data, which is then analyzed to provide health and condition awareness, operational and crew assistance, and operational optimization. Against this background, ABS has issued an Advisory on data quality for marine and offshore applications.

High-quality data is essential in these applications to ensure accuracy and confidence in analytical results and decision making. Data quality assessment, monitoring, and control are key elements in the data flow associated with such data-centric functions.


With the rapid rise of digital technologies, connectivity, and data processing and analytics, data-centric applications such as Smart technology are becoming increasingly common in the marine and offshore industries. To ensure a reliable and efficient data flow and accurate analytics outcomes, data quality must be managed throughout the data lifecycle. Data quality assessment, monitoring and control are the key elements in the data quality management cycle. Their main purpose is to ensure high-quality data that fits the needs of data analytics, enabling data-driven functions and decision making.

According to ABS, the main challenges facing the maritime industry with regard to data quality for IoT data are:

- Collecting quality data is a challenge in marine and offshore environments: Marine and offshore environments and operations involve unique variables such as noise, dust, temperature, humidity, electronic and magnetic interference, and remote location, since they are typically far from land-based infrastructure. These have a significant impact on the performance, reliability, and longevity of the sensors, cables, and data communication and storage devices. Data collected, transmitted, and stored in these severe environments may have significant quality issues, such as data loss, invalid values, transmission delay, and incorrect timestamp order, among others.

- Data quality for IoT data is highly dependent on the data processing quality: Data acquisition and pre-processing occur when data is collected and converted into a desired form for future analysis. Data integration and transformation consolidate and combine data from different data sources and ensure the data is compatible with the structure of the target data usage. Marine IoT data is typically collected through diverse onboard sensors and data sources with various hardware and software specifications, data formats and structures, time intervals, and data definitions, which increases the difficulty of effectively integrating, cleansing and mapping the data.

- Data quality in marine and offshore applications requires domain support: Data quality is not only an IT/data science task. Commercially available automated data profiling tools and software can only help discover generic data quality problems such as data type mismatches. Data quality assessment, monitoring and control should be considered in the context of the data applications and the overall implementation objectives, including business goals. The involvement of business and technical subject matter experts is critical to successfully design, develop and improve the data quality validation rules and the means for measuring, monitoring and improving data quality. Domain knowledge is needed mainly to define data quality rules and what counts as a violation.

- Data quality awareness and knowledge in the marine and offshore industries are not fully mature: Improving data quality requires a cultural shift within organizations. To improve maturity, managerial accountabilities should be established to build a culture that values quality data. Establishing a process management cycle can help raise data quality awareness and drive improvement in the marine and offshore industries.
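
To make the first challenge above more concrete, the following minimal Python sketch flags the kinds of issues named there (data loss, invalid values, transmission delay, incorrect timestamp order) in a single sensor channel. It is an illustration only and is not taken from the ABS Advisory: the `Reading` record, the `find_issues` function, and all thresholds are hypothetical, and in practice the limits would be set by domain experts.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Reading:
    sent_at: datetime        # timestamp assigned at the sensor (assumed available)
    received_at: datetime    # timestamp assigned by the onboard data store
    value: Optional[float]   # None models a lost or empty sample


def find_issues(readings, valid_range, expected_interval, max_delay):
    """Return (index, issue) tuples for one sensor channel.

    valid_range, expected_interval and max_delay are hypothetical,
    application-specific thresholds.
    """
    low, high = valid_range
    issues = []
    previous = None
    for i, r in enumerate(readings):
        if r.value is None:
            issues.append((i, "missing value"))
        elif not (low <= r.value <= high):
            issues.append((i, "invalid value"))
        if r.received_at - r.sent_at > max_delay:
            issues.append((i, "transmission delay"))
        if previous is not None:
            gap = r.sent_at - previous.sent_at
            if gap <= timedelta(0):
                issues.append((i, "timestamp out of order"))
            elif gap > 1.5 * expected_interval:
                issues.append((i, "data gap"))
        previous = r
    return issues


# Made-up sample data: a 1 Hz channel with one defect of each kind.
t0 = datetime(2024, 1, 1)
s = timedelta(seconds=1)
readings = [
    Reading(t0, t0 + 0.2 * s, 81.5),
    Reading(t0 + 1 * s, t0 + 1.2 * s, None),     # lost sample
    Reading(t0 + 2 * s, t0 + 7 * s, 82.0),       # arrived 5 s late
    Reading(t0 + 6 * s, t0 + 6.1 * s, 250.0),    # after a gap, out of range
    Reading(t0 + 5 * s, t0 + 5.2 * s, 82.3),     # older than previous sample
]
issues = find_issues(
    readings, valid_range=(0.0, 150.0), expected_interval=s, max_delay=2 * s
)
for index, issue in issues:
    print(index, issue)
```

Generic checks like these are what automated tools tend to cover; deciding that 150 is the right upper limit for a given channel is where the domain knowledge mentioned above comes in.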

Some of the key elements of the process management cycle for improving data quality, according to ABS, are:

- Identification of critical data issues and business rules

- Evaluation of data against expectations

- Identification and prioritization of opportunities for improvement based on the findings and feedback from stakeholders

- Measurement, monitoring and reporting on data quality

- Improvement of data quality by incorporating incremental changes in the business cycle, such as installing an improved data collection system

- Integration of data quality controls into business and technical processes to prevent issues from recurring.

The Advisory aims to help those who are facing such challenges and to “assist in driving the industry towards better and more reliable data-centric applications.”

Notably, the Advisory aims to provide an overview of the relevant standards and industry best practices, and to offer general guidance and recommendations on data quality assessment, monitoring and control as applied to marine and offshore applications, with a particular focus on:

- Overview of international data quality assessment standards

- Data quality assessment approaches

- Recommended framework for data quality assessment, monitoring and control

- Review of typical data quality capabilities available in commercial products.

ABS notes that “data quality criteria is often applied in areas such as business intelligence, customer relations, and numerous applications in the medical and finance industries”; the Advisory, by contrast, focuses on marine and offshore applications and looks primarily at how to apply data quality standards to time-series data generated by onboard sensors, measurement instruments, and automation systems.

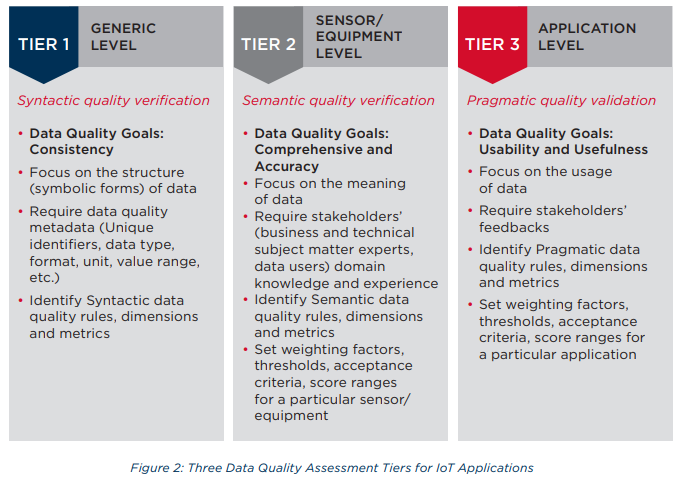

To assess data quality in each tier, two common approaches, top-down and bottom-up, are defined, along with the detailed procedures and activities of data quality assessment and control. ABS notes that, in order to get value out of the data, four important aspects need to be managed properly within organizations:

- Define data quality expectations: data quality validation rules, against which data quality can be validated, need to be defined clearly and documented consistently based on application goals and requirements. The rules need to be reviewed continuously to determine whether they should be refined.

- Develop a measurement system: a hierarchy of data quality measurement methods is recommended for measuring data conformance to the defined rules. Data quality results should be quantified and reported at multiple hierarchical levels, such as the metric, dimension and overall levels.

- Identify improvement opportunities: the root causes of data issues need to be investigated to determine why and where each data defect originated. A remediation and improvement plan should be developed to address the root causes and to prevent the issue from recurring.

- Establish controls and report conformance to requirements: operational data quality controls help prevent root causes from recurring and keep simple errors from occurring. An operational data quality control plan should be defined upfront. Data quality reporting helps data consumers understand the ongoing condition of the data against the defined rules.
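
As a rough illustration of the first two aspects above (validation rules and a hierarchical measurement system), the sketch below scores a handful of records at the metric, dimension and overall levels. It is not the scoring method of the ABS Advisory: the rule names, the "completeness" and "validity" dimension labels, the shaft-speed limits, and the equal weighting used for averaging are all assumptions made for the example.

```python
from statistics import mean

# Hypothetical validation rules: (dimension, metric name, check on one record).
RULES = [
    ("completeness", "value_present",
     lambda r: r.get("rpm") is not None),
    ("validity", "rpm_in_range",
     lambda r: r.get("rpm") is not None and 0 <= r["rpm"] <= 120),
    ("validity", "unit_is_rpm",
     lambda r: r.get("unit") == "rpm"),
]


def assess(records, rules=RULES):
    """Score records at metric, dimension and overall levels (0.0 to 1.0)."""
    if not records:
        raise ValueError("no records to assess")

    # Metric level: fraction of records that pass each rule.
    metrics = {}
    for dimension, name, check in rules:
        passed = sum(1 for r in records if check(r))
        metrics[(dimension, name)] = passed / len(records)

    # Dimension level: unweighted mean of the metrics in each dimension
    # (equal weighting is an assumption of this sketch).
    grouped = {}
    for (dimension, _name), score in metrics.items():
        grouped.setdefault(dimension, []).append(score)
    dimension_scores = {d: mean(scores) for d, scores in grouped.items()}

    # Overall level: unweighted mean of the dimension scores.
    overall = mean(list(dimension_scores.values()))
    return metrics, dimension_scores, overall


# Made-up shaft-speed records for illustration.
records = [
    {"rpm": 85.0, "unit": "rpm"},
    {"rpm": None, "unit": "rpm"},    # missing value
    {"rpm": 300.0, "unit": "rpm"},   # outside the assumed 0-120 range
    {"rpm": 90.0, "unit": "Hz"},     # wrong unit label
]
metrics, dimension_scores, overall = assess(records)
print(metrics)
print(dimension_scores)
print(overall)
```

Reporting all three levels lets a data consumer see an overall score at a glance and then drill down to the specific rule that is failing, which is a natural starting point for the root-cause investigation described in the third aspect.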

Further to this, in May, ABS published the Guide for Smart Functions for Marine Vessels and Offshore Units (Smart Guide) to help marine and offshore owners and operators leverage their operational data. The Smart Guide introduces a class approach leading to the marine and offshore industries’ first set of notations to help owners and operators qualify and use smart functions.

To explore more about the ABS Advisory, click on the PDF below.